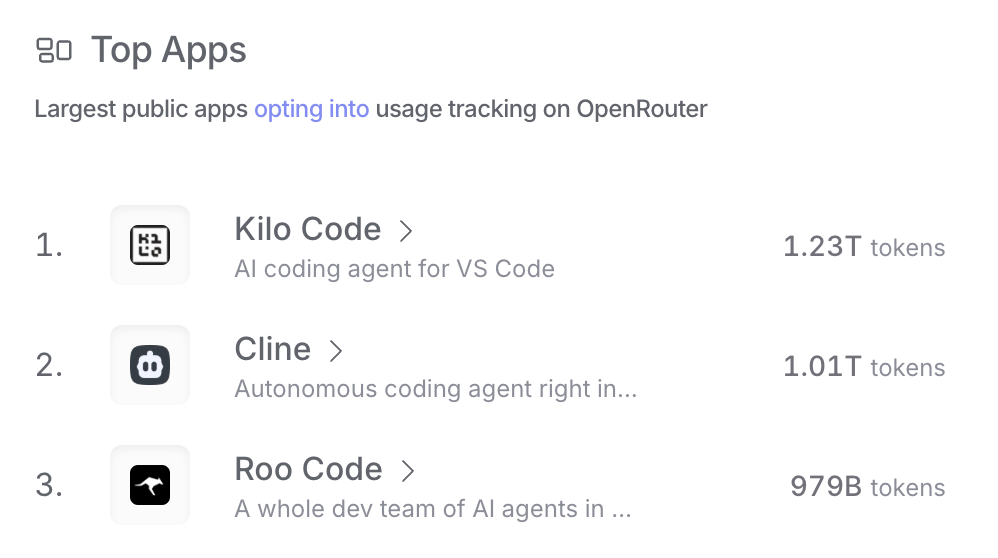

The AI industry is heading toward a cliff. As Kilo’s recent milestone shows—breaking through 1 trillion tokens per month on OpenRouter—we’re witnessing explosive growth in AI inference costs.

Kilo’s token usage on OpenRouter breaking through 1 trillion tokens per month

Developers face an impossible choice. Pay for premium subscriptions and hit usage limits. Or use your own API keys and risk catastrophic bills. The stark reality? Developers can easily burn through $100 per day on token usage alone—I’ve seen it happen to my developer friends firsthand.

But what if the problem isn’t the cost of AI itself, but something more fundamental? What if the tools we use every day are actively working against our AI agents?

My $200 Wake-Up Call

I learned this lesson the hard way. My first week pairing Cline, an open-source AI coding agent, with Gemini 2.5 Pro felt magical, until the Google Cloud Platform invoice arrived: $200 for two weeks of coding. The agent had been blindly reading entire codebases, running unrestricted database queries, and consuming tokens as if there were no limits.

That’s when I switched to Claude Code Max 20x at $200/month—at least the costs were predictable. But even with this premium subscription, I still hit usage limits and saw the dreaded message:

```
[INFO] Usage threshold reached. Switching to Sonnet for subsequent requests.
```

Suddenly, the quality dropped. Complex refactoring became error-prone. The agent struggled. All because third-party tool invocations consumed my token budget blindly. This experience revealed a deeper truth: the problem wasn’t just my workflow; it was the tools themselves.

The ‘Token Blindness’ Problem

The real issue is that our command-line tools were built for humans, not AI agents. This is a critical design mismatch. A developer can visually scan 10,000 lines of logs, using color-coding and pattern recognition to spot a red error message in seconds, at no token cost. An AI agent, however, must ingest every single character as tokens. It can’t ‘scroll past’ or ‘glance over’ irrelevant data. That 10,000-line log easily costs tens of thousands of tokens, even if 9,999 lines are useless.
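
To put rough numbers on this, here is a minimal Python sketch that estimates what a 10,000-line log would cost an agent compared with the single line that matters. It rests on one loud assumption: about four characters per token, a common rule of thumb for English text. Real tokenizers differ by model, and timestamps and identifiers often tokenize worse, so treat the results as order-of-magnitude estimates. The log contents and the helper names `estimate_tokens` and `build_sample_log` are hypothetical.

```python
import math

# Assumption: about 4 characters of English text per token. This is a rule of
# thumb, not a real tokenizer; actual counts vary by model and content.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for `text` (0 for an empty string)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def build_sample_log(lines: int) -> str:
    """Build a hypothetical log: routine INFO lines, then one ERROR line last."""
    body = [
        f"2025-06-19T12:00:{i % 60:02d}Z INFO worker-3 request handled in 12ms"
        for i in range(lines - 1)
    ]
    body.append("2025-06-19T12:59:59Z ERROR worker-3 connection refused")
    return "\n".join(body)


if __name__ == "__main__":
    log = build_sample_log(10_000)
    error_line = log.splitlines()[-1]
    print(f"full log:   ~{estimate_tokens(log):,} tokens")
    print(f"error line: ~{estimate_tokens(error_line):,} tokens")
```

On this synthetic log the heuristic gives roughly 147,000 tokens for the whole file versus about 14 for the one error line an engineer would actually look for.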

This is token blindness: AI agents execute commands with no idea how many tokens they will consume. They’re operating without the context needed to make cost-effective decisions.

Even state-of-the-art tools like Claude Code, used by millions, suffer from this. While its 14 built-in tools are token-efficient, the moment it calls any external CLI tool—docker logs, psql, git diff—it’s completely blind. It has no idea if it’s about to consume 10 tokens or 100,000. This blindness is the direct cause of evaporating subscription credits and shocking pay-as-you-go bills.

Real Incidents from the Field: The Scale of the Problem

This isn’t a theoretical problem. It’s causing massive, quantifiable waste across the industry.

- Codex CLI’s 198K Token Surprise (April 2025): A developer’s agent in `--full-auto` mode made 29 API calls that each exceeded 150k tokens. The agent couldn’t know that running `git diff` on a large repository would explode into a massive token bill.

- The $67 Database Disaster (March 2025): A developer using the OpenAI API was billed for 5.2 million tokens in just two days. Their agent ran `SELECT * FROM logs` without realizing the table contained millions of rows. It couldn’t measure before acting.

- Azure’s 2,691 Token ‘Hi’ (July 2025): Azure AI Foundry automatically injected huge OpenAPI schemas into every interaction. An agent’s simple ‘Hi’ response consumed 2,691 tokens, nearly all of it invisible overhead.

- n8n’s 140K Token API Calls (June 2025): An n8n AI Agent hit rate limits because it included the full conversation history with every tool call, causing each request to balloon to 140,000 tokens without its knowledge.

- The AutoGPT File Loop Disaster: Agents would modify files and then re-read the entire modified codebase, creating catastrophic loops that consumed hundreds of thousands of tokens per iteration because they were blind to file sizes.

In every case, the pattern is identical: agents invoke tools without knowing the token consequences. They’re flying blind, and it’s costing a fortune.

From Prompt Engineering to Context Engineering

For years, we’ve focused on ‘prompt engineering’—crafting the perfect instructions for an AI. But this misses the point. You can write a perfect prompt to query a database, but if the agent is blind to the fact that the table has 10 million rows, it will still execute SELECT * and trigger a token disaster.

The real solution lies in context engineering.

> I really like the term "context engineering" over prompt engineering.
>
> It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.
>
> tobi lutke (@tobi), June 19, 2025

As Tobi Lutke put it, it is ‘the art of providing all the context for the task to be plausibly solvable by the LLM.’ For tool use, the most critical missing context is the token cost. Context engineering is about designing systems that give an LLM everything it needs to accomplish a task, including the information and tools required.

The goal isn’t just to tell the agent what to do, but to give it the context to decide how to do it efficiently. This is the shift from writing instructions to architecting an environment where the agent can succeed.

Where Token Bombs Hide: Common Command Patterns

These token explosions aren’t edge cases; they are born from common, everyday commands that were never designed for non-human users.

- Unbounded Database Queries: `SELECT * FROM logs` without a `LIMIT` clause is the most common culprit, capable of generating millions of tokens from a single command.

- Continuous Streaming Commands: Tools like `tail -f` or `docker logs --follow` are the most dangerous, as they produce an infinite stream of tokens that never terminates on its own.

- Recursive Filesystem Traversal: A simple `find /` or `ls -laR` can be surprisingly costly, scanning an entire filesystem and generating hundreds of thousands of tokens.

- Verbose Debug Modes: Enabling flags like `set -x` or `--debug` is insidious, multiplying a command’s output by 10-100x and turning a 500-token script into a 45,000-token nightmare.

- Cloud CLI Metadata Injection: AWS, Azure, and GCP CLIs often inject hidden metadata, schemas, and safety prompts, bloating every call with thousands of extra tokens.

Every journalctl, git log, terraform show, and npm ls is a potential token bomb waiting to explode.
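
Several of these patterns are recognizable from the command text alone, so a wrapper could flag them before anything runs. The following is a hypothetical pre-flight check in Python, not a feature of ctx: the `preflight` function and its rule list are invented for illustration, and the regular expressions are deliberately simple heuristics that will miss variants (for example `tail -n 100 -F`) and can misfire on unusual inputs. The cloud-CLI case is left out because it depends on what a tool returns, not on how it is invoked. A robust guard would parse the shell command and the SQL properly.

```python
import re

# Hypothetical pre-flight rules as (compiled pattern, reason) pairs. These are
# regex heuristics only: they miss variants and can misfire on odd inputs.
RISKY_PATTERNS = [
    (re.compile(r"\bselect\s+\*\s+from\b(?!.*\blimit\b)", re.IGNORECASE | re.DOTALL),
     "unbounded SELECT * without LIMIT"),
    (re.compile(r"\b(tail|logs)\b.*\s-\w*f\b|--follow\b"),
     "streaming command that never terminates on its own"),
    (re.compile(r"\bfind\s+/(\s|$)|\bls\s+-\w*R"),
     "recursive filesystem traversal"),
    (re.compile(r"\bset\s+-\w*x|--debug\b"),
     "verbose debug mode"),
]


def preflight(command: str) -> list[str]:
    """Return the reasons a command looks like a potential token bomb."""
    return [reason for pattern, reason in RISKY_PATTERNS if pattern.search(command)]


if __name__ == "__main__":
    for cmd in [
        "psql -c 'SELECT * FROM logs'",
        "psql -c 'SELECT * FROM logs LIMIT 10'",
        "docker logs --follow api",
        "ls -laR /",
    ]:
        print(f"{cmd!r}: {preflight(cmd) or 'no obvious risk'}")
```

For instance, `preflight("tail -f /var/log/app.log")` returns a single streaming warning, while the same `SELECT` ending in `LIMIT 10` passes cleanly.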

Enter Context (ctx): Token-Aware Tool Invocation

Context (ctx) solves the token blindness problem by giving agents the one thing they need: visibility into token usage before they commit to a command.

For subscription users on plans like Claude Code Max, ctx means staying on the high-quality Opus model longer and getting more value from your investment. For pay-as-you-go users, it means preventing surprise bills and using powerful open-source tools without fear.

ctx works by wrapping any CLI tool’s output in a structured JSON envelope, transforming it into an AI-ready, token-aware interface.

```json
{
  "data": {
    "input": "psql -c 'SELECT status, COUNT(*) FROM users GROUP BY status'",
    "output": "active: 1250\ninactive: 750\npending: 125"
  },
  "metadata": {
    "success": true,
    "tokens": 42,
    "duration": 127,
    "bytes": 245
  }
}
```

This simple, structured output gives the agent the context it was missing, enabling it to make smarter, cost-effective decisions.
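
The shape of that envelope is easy to reproduce, which helps when reasoning about what it adds. Below is a minimal Python sketch, not ctx’s implementation: it runs a command with `subprocess`, times it, and fills in the same fields as the example. Two assumptions to flag: token counts use a rough four-characters-per-token heuristic rather than a real tokenizer, and `duration` is taken to be milliseconds and `bytes` the size of the captured output, since the example does not state units. The function name `run_with_envelope` is hypothetical.

```python
import json
import math
import shlex
import subprocess
import sys
import time

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not a real tokenizer


def run_with_envelope(argv: list[str]) -> dict:
    """Run `argv` and wrap its output in a ctx-style JSON envelope (sketch)."""
    start = time.monotonic()
    proc = subprocess.run(argv, capture_output=True, text=True)
    elapsed_ms = round((time.monotonic() - start) * 1000)
    success = proc.returncode == 0
    # On failure, append stderr so the caller can see why the command failed.
    output = proc.stdout if success else proc.stdout + proc.stderr
    return {
        "data": {
            "input": shlex.join(argv),
            "output": output,
        },
        "metadata": {
            "success": success,
            "tokens": math.ceil(len(output) / CHARS_PER_TOKEN),
            "duration": elapsed_ms,  # assumed to be milliseconds
            "bytes": len(output.encode("utf-8")),  # assumed: size of the output
        },
    }


if __name__ == "__main__":
    envelope = run_with_envelope([sys.executable, "-c", "print('active: 1250')"])
    print(json.dumps(envelope, indent=2))
```

Because the counts travel alongside the output, an agent or its harness can compare `metadata.tokens` against a budget before passing `data.output` into the model’s context.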

Key Features That Matter

- Universal Compatibility: ctx works with any CLI tool out of the box, including `psql`, `git`, `docker`, `kubectl`, `ls`, and `grep`. If it runs in your shell, it works with ctx.

- Intelligent Resource Limits: Prevent runaway costs with precise controls. You can set hard limits on tokens, lines of output, or total bytes, and even apply timeouts to streaming commands.

  ```bash
  # Prevent a token explosion from a large database query
  ctx --max-tokens 5000 psql -c "SELECT * FROM events"

  # Safely stream logs, automatically terminating after 1 MiB (1,048,576 bytes)
  ctx --stream --max-output-bytes 1048576 tail -f /var/log/app.log
  ```

- Machine-Readable Failures: When a limit is breached, ctx returns a structured error. This isn’t a failure; it’s data. The agent can see why the command was stopped and intelligently decide to refine its query or change its strategy.

- Privacy-First Design: A `--private` flag disables all telemetry and history recording for sensitive operations.
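
To illustrate how an output cap on a streaming command and a machine-readable failure fit together, here is a hypothetical Python sketch. It does not reproduce ctx’s actual flags, behavior, or error schema: it reads a child process’s output line by line, kills the process as soon as the next line would exceed a byte or estimated-token budget, and returns a structured result the agent can inspect. The function `run_with_limits`, the `limit_exceeded` field, and the four-characters-per-token heuristic are all assumptions made for illustration.

```python
import math
import subprocess
import sys

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not a real tokenizer


def run_with_limits(argv: list[str], max_bytes: int, max_tokens: int) -> dict:
    """Stream `argv`'s stdout line by line, killing it once a budget is exceeded.

    Hypothetical sketch: the result layout and the "limit_exceeded" field are
    invented for illustration and are not ctx's actual error schema.
    """
    kept: list[str] = []
    used_bytes = 0
    used_chars = 0
    exceeded = None
    with subprocess.Popen(argv, stdout=subprocess.PIPE, text=True) as proc:
        for line in proc.stdout:
            line_bytes = len(line.encode("utf-8"))
            if used_bytes + line_bytes > max_bytes:
                exceeded = "max_bytes"
            elif math.ceil((used_chars + len(line)) / CHARS_PER_TOKEN) > max_tokens:
                exceeded = "max_tokens"
            if exceeded:
                proc.kill()  # stop a runaway or never-ending stream
                break
            kept.append(line)
            used_bytes += line_bytes
            used_chars += len(line)
    # Leaving the `with` block closes the pipe and waits for the process to exit.
    return {
        "data": {"output": "".join(kept)},
        "metadata": {
            "success": exceeded is None and proc.returncode == 0,
            "limit_exceeded": exceeded,  # None, "max_bytes", or "max_tokens"
            "bytes": used_bytes,
            "tokens": math.ceil(used_chars / CHARS_PER_TOKEN),
        },
    }


if __name__ == "__main__":
    # A child that prints forever, standing in for `tail -f`.
    endless = [sys.executable, "-c", "while True: print('x' * 99)"]
    print(run_with_limits(endless, max_bytes=1000, max_tokens=10_000))
```

With the endless `print` loop in the demo, the child is killed after ten 100-byte lines and the result reports `"limit_exceeded": "max_bytes"` with `success` set to false, a signal an agent can act on rather than a truncated wall of text.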

The Broader Implications: Building a Token-Grounded Future

ctx represents a fundamental shift. By preventing token disasters, we can move from simply executing commands to making intelligent, resource-aware decisions.

- The Codex CLI meltdown would have been avoided. Instead of blindly running `git diff` for 198,000 tokens, an agent using ctx could have first run `ctx git diff --stat` to get a summary for just 50 tokens.

- The $67 database disaster would have been prevented. Instead of `SELECT *`, the agent could have first run `ctx --max-lines 1 psql -tA -c "SELECT COUNT(*) FROM logs"`, seen a row count in the millions, and decided to run an aggregate query for only 100 tokens. (The `-t` and `-A` flags make psql print only the bare count, so the one-line limit doesn’t cut the result off behind psql’s header row.)
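
The count-first pattern in the second example does not depend on any particular tool. Here is a self-contained Python sketch of it using the standard library’s `sqlite3` module, with an in-memory table standing in for `logs`. It is an illustration of the idea, not how ctx works internally; the table layout, the `MAX_ROWS_TO_FETCH` threshold, and the function names are hypothetical. The code counts first, fetches raw rows only when the result is small, and otherwise falls back to an aggregate query.

```python
import sqlite3

MAX_ROWS_TO_FETCH = 100  # hypothetical budget for returning raw rows


def inspect_logs(conn: sqlite3.Connection) -> list[tuple]:
    """Measure before acting: count rows, then fetch raw rows only if few."""
    (row_count,) = conn.execute("SELECT COUNT(*) FROM logs").fetchone()
    if row_count <= MAX_ROWS_TO_FETCH:
        return conn.execute("SELECT * FROM logs ORDER BY id").fetchall()
    # Too many rows: return a compact aggregate instead of every row.
    return conn.execute(
        "SELECT level, COUNT(*) FROM logs GROUP BY level ORDER BY level"
    ).fetchall()


def make_demo_db(rows: int) -> sqlite3.Connection:
    """Create an in-memory stand-in for the `logs` table with synthetic rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logs (id INTEGER PRIMARY KEY, level TEXT, message TEXT)")
    conn.executemany(
        "INSERT INTO logs (level, message) VALUES (?, ?)",
        [("ERROR" if i % 50 == 0 else "INFO", f"event {i}") for i in range(rows)],
    )
    return conn


if __name__ == "__main__":
    print(inspect_logs(make_demo_db(10_000)))
    print(inspect_logs(make_demo_db(3)))
```

With 10,000 synthetic rows the function skips the raw `SELECT *` and returns two aggregate tuples, `[('ERROR', 200), ('INFO', 9800)]`; with a handful of rows it returns them directly.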

Important clarification: ctx doesn’t solve every AI cost problem, like conversation bloat or context accumulation between messages. It is specifically designed to solve token blindness—the critical moment an agent interacts with an external command.

This aligns with the future of agentic AI, where systems must operate within budgets and make informed decisions about resource consumption. Our tools must become token-grounded.

A Sustainable Path for AI Development

As AI capabilities grow, so will the costs. Parallel agents, longer context windows, and more complex tasks will drive token consumption higher. The economics of AI agents are still in their early stages, but one thing is clear: blind tool use is unsustainable.

The choice is not between expensive, high-quality models and cheap, less capable ones. It’s between informed and uninformed tool invocation. We need to give our agents visibility to make intelligent decisions. Without it, premium subscribers will constantly hit usage limits, and pay-as-you-go users will face financial risk.

The future of AI development doesn’t have to cost a fortune or force constant downgrades. With token-aware tool invocation, we can build a sustainable, affordable, and powerful ecosystem. The age of token blindness must end.

ctx is open source and available today: github.com/slavakurilyak/ctx