For the ninth consecutive year, Rust is the most admired programming language on the planet. In the Stack Overflow 2025 survey [1], a staggering 72.4% of developers who use Rust want to keep using it. Yet only 14.8% of respondents actually use it. This isn’t a paradox; it’s a diagnosis. Rust is the language everyone wants, but few can afford to adopt.

Why the gap?

A significant part of Rust’s success is undoubtedly its build tool and package manager, Cargo. It was ranked the most admired tool in its category (71%), a testament to its stellar developer experience. But a great package manager isn’t enough to overcome the inertia of the real world.

The Admiration Gap: Why the Slow Adoption?

Jon Gjengset, in a recent interview [2], argues the reasons are less technical and more economic. The friction isn’t just in the code; it’s in the balance sheet.

- The Cost of Talent: ‘Adopting a new language is seen as a big cost because it is,’ Gjengset states. ‘You have a bunch of talent already at your company that know the existing codebase, the existing language and if you were to switch Rust, all of those people will suddenly need to learn Rust, which is a huge investment.’

- The Weight of Legacy: ‘…all your current code is not Rust. So you either need to translate it or over time you need to build up like replacement components… all of this is like a very costly endeavor…’

- The Barrier to Entry: ‘…the switching cost is so high that most companies are not willing to do it unless there’s a very very strong reason…’

Adoption is, by necessity, a slow, gradual process. But organizational inertia is only half the story. The other half is the developer’s daily reality: a steep learning curve and a compiler that tests your patience.

Compilation Slog: A Developer’s Nightmare

(XKCD: Compiling)

Rust’s slow compilation is a well-known pain point. A June 2025 blog post, ‘Why is the Rust compiler so slow?’ [3], provides a granular case study, reducing a project’s build time from four minutes to just nine seconds. The investigation uncovered several culprits:

- Link-Time Optimization (LTO): The `codegen_module_perform_lto` step accounted for a staggering 80% of the initial compilation time. Simply disabling ‘fat’ LTO cut the binary compilation from 175 seconds to 51.

- LLVM’s Heavy Lifting: Even without LTO, LLVM’s optimization passes, particularly inlining (`InlinerPass`), were a major bottleneck.

- Large Async Functions: The compiler transforms `async` functions into complex nested structures. These are notoriously difficult for LLVM to optimize efficiently. The solution involved breaking down large async functions and using `Pin<Box<dyn Future>>` to erase type information.

- Generics and Monomorphization: When a generic function from a dependency is used, it gets re-optimized in the final crate. This re-optimization work adds up, bloating compile times. The experimental `-Zshare-generics` flag showed significant promise here.

- The Build Environment: In a surprising twist, switching the Docker base image from Alpine (using `musl`) to Debian slashed compile time from 29 seconds to 9. The memory allocator can have a dramatic impact.
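The two code-level remedies in the list can be sketched in plain Rust. This is not code from the blog post [3]: every name here (`BoxFuture`, `parse_step`, `double_step`, `pipeline`, `count_bytes`, `block_on`) is hypothetical, and the tiny executor exists only so the example runs without an async runtime crate. Returning a `Pin<Box<dyn Future>>` from each sub-step means the caller’s generated state machine stores a pointer instead of embedding the callee’s full concrete type. The non-generic inner function is a common pattern for limiting monomorphization, swapped in as an illustration; it is not the `-Zshare-generics` approach the post tested.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical alias: a heap-allocated, type-erased future. Boxing hides the
/// compiler-generated state-machine type behind a trait object.
type BoxFuture<'a, T> = Pin<Box<dyn Future<Output = T> + Send + 'a>>;

/// Hypothetical sub-step of a larger async workflow, returned boxed so callers
/// see one small pointer type instead of nesting this state machine.
fn parse_step(input: String) -> BoxFuture<'static, usize> {
    Box::pin(async move { input.split_whitespace().count() })
}

/// Another hypothetical boxed sub-step.
fn double_step(n: usize) -> BoxFuture<'static, usize> {
    Box::pin(async move { n * 2 })
}

/// The formerly "large" async function, now split into boxed pieces.
async fn pipeline(input: String) -> usize {
    let words = parse_step(input).await;
    double_step(words).await
}

/// Generic entry point with a non-generic inner function: only the thin
/// `as_ref` conversion is monomorphized per caller type; `inner` is compiled once.
fn count_bytes<S: AsRef<str>>(s: S) -> usize {
    fn inner(s: &str) -> usize {
        s.len()
    }
    inner(s.as_ref())
}

/// Waker that does nothing; enough for futures that never actually wait.
struct NoopWake;
impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Minimal demo executor: busy-polls until the future completes.
fn block_on<F: Future>(fut: F) -> F::Output {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    let mut fut = Box::pin(fut);
    loop {
        if let Poll::Ready(out) = fut.as_mut().poll(&mut cx) {
            return out;
        }
    }
}

fn main() {
    let n = block_on(pipeline("fast builds please".to_string()));
    println!("{n}"); // 6
    println!("{}", count_bytes("abc") + count_bytes(String::from("de"))); // 5
}
```

Both tricks have a price: boxing adds a heap allocation and dynamic dispatch at each boundary, and the inner-function pattern only pays off when the generic part really is a thin conversion.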

This deep dive validates a long-standing complaint: Rust’s reliance on LLVM creates a sluggish feedback loop. As Brian Anderson, a co-founder of Rust, admitted in [4]: ‘For years Rust slowly boiled in its own poor compile times… It was 1.0. Those decisions were locked in.’

This stands in stark contrast to the philosophy behind Zig, where, as I discuss in my post on Zig, a fast feedback loop is the primary goal. By building its own x86 backend, the Zig team can re-analyze a half-million-line codebase in just 63 milliseconds after an edit, a 222-fold speedup over a cold compile. Andrew Kelley, Zig’s creator, lists numerous reasons for moving away from LLVM [5], from its slow compilation speed and bugs to the desire to escape a monoculture and innovate end-to-end.

Dependency Hell: Who Asked for a Thousand Dependencies?

Rust’s dependency bloat is another source of friction. One developer [6] shared their experience with a ‘trivial’ webserver project:

Out of curiosity I ran tokei, a tool for counting lines of code, and found a staggering 3.6 million lines of rust. Removing the vendored packages reduces this to 11136 lines of rust… How could I ever audit all of that code?

This isn’t just about code volume; it’s about trust. As another post [7] asks:

What if some foundational package uses unsafe, but uses it incorrectly? What happens when this causes problems for every package that uses that package?

Anxiety arises when the promise of safe abstractions is broken by incorrect unsafe internals. This erodes trust in the ecosystem. The community’s solution often circles back to adding more to the standard library—a philosophy central to Go’s design, as covered in my thoughts on Go—but the core team resists the maintenance burden. This is a mistake. The burden must be on the language stewards, not on a community forced to navigate a minefield of unauditable dependencies.

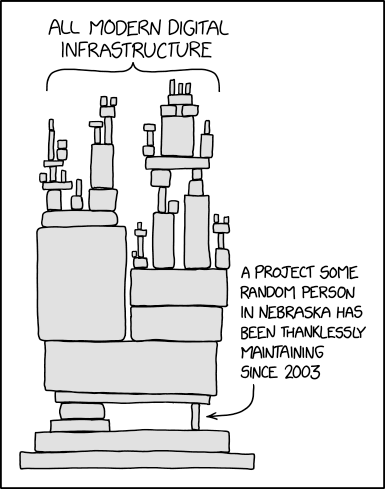

(XKCD: Dependency)

Async/Await: A Complex Beast

Graydon Hoare, the creator of Rust, expressed his reservations about the async/await model [8], feeling its complexity might not ‘quite pay for itself.’ He admits, ‘I never would have agreed to go in this direction “if I was BDFL” — I never would have imagined it could even work.’

His skepticism is well-founded. As we saw in the compiler analysis, large async functions were among the main contributors to slow builds in that project. This is where conceptual complexity meets practical friction.

Zig, for its part, removed async/await entirely in version 0.12.0. The reasons [9] mirror Rust’s struggles: LLVM’s poor optimization of async functions, debugger failures, and the persistent ‘cancellation problem’ (the challenge of reliably stopping a task and cleaning up its resources).
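Rust has its own version of the cancellation problem. Cancelling a task means dropping its future, and cleanup lives in `Drop`. The sketch below uses hypothetical `Connection` and `Never` types and a hand-rolled poll instead of a runtime; it shows that a destructor held across an `.await` does run when the suspended future is dropped. The catch is that `Drop::drop` is synchronous, so cleanup that itself needs to await, such as a graceful network shutdown, has no direct home in stable Rust.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical resource whose cleanup must run even if the task is cancelled.
struct Connection {
    closed: Arc<AtomicBool>,
}

impl Drop for Connection {
    fn drop(&mut self) {
        // Synchronous cleanup only: `drop` cannot `.await`.
        self.closed.store(true, Ordering::SeqCst);
    }
}

/// A future that is always pending, standing in for slow I/O.
struct Never;
impl Future for Never {
    type Output = ();
    fn poll(self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<()> {
        Poll::Pending
    }
}

/// Waker that does nothing; the task is never woken in this demo.
struct NoopWake;
impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Polls a task once, then cancels it by dropping it.
/// Returns whether the connection's cleanup ran.
fn poll_once_then_cancel() -> bool {
    let closed = Arc::new(AtomicBool::new(false));
    let flag = closed.clone();
    let task = async move {
        let _conn = Connection { closed: flag }; // lives across the await
        Never.await; // the task is suspended here when it is cancelled
    };
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    let mut task = Box::pin(task);
    assert!(task.as_mut().poll(&mut cx).is_pending());
    assert!(!closed.load(Ordering::SeqCst)); // still open while suspended
    drop(task); // cancellation in Rust = dropping the future
    closed.load(Ordering::SeqCst)
}

fn main() {
    println!("closed after cancel: {}", poll_once_then_cancel());
}
```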

Safe/Unsafe: Anxiety Brews

The `unsafe` keyword is a necessary escape hatch for interacting with the operating system, FFI, or performance-critical data structures. But its presence undermines Rust’s core value proposition: safe code is only as sound as the unsafe code beneath it. When safe abstractions are built on a shaky foundation of incorrect unsafe code, anxiety brews and trust in the ecosystem erodes.
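The usual pattern is to wrap an unsafe operation in a safe function whose own checks uphold that operation’s preconditions. In this minimal sketch, `middle` is a hypothetical function; `slice::get_unchecked` is the real standard-library method, and calling it with an out-of-bounds index is undefined behavior. Nothing in the safe signature tells a caller whether the guarding check is actually there, which is why one incorrect foundational crate can put everything downstream at risk.

```rust
/// Returns the middle element of a slice, if any.
/// The function is safe to call only because the emptiness check below
/// upholds the in-bounds precondition of `get_unchecked`. Remove the check
/// and the signature still says "safe" while `middle(&[])` becomes UB.
pub fn middle(values: &[u32]) -> Option<u32> {
    if values.is_empty() {
        return None;
    }
    let idx = values.len() / 2;
    // SAFETY: `values` is non-empty, so `len / 2 < len` and `idx` is in bounds.
    Some(unsafe { *values.get_unchecked(idx) })
}

fn main() {
    println!("{:?}", middle(&[1, 2, 3])); // Some(2)
    println!("{:?}", middle(&[])); // None
}
```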

GameDev Requirements: Friction in Practice

The gamedev community provides a stark example of this friction. One developer, after three years with Rust and the Bevy engine, migrated back to C# [10], citing several issues:

- Iteration Speed: Rust’s verbosity and ‘compile-time friction’ were ill-suited to the rapid prototyping that gameplay development requires.

- Ecosystem Churn: The Bevy engine’s frequent API changes created a significant migration burden with each update.

- Modding: The lack of a stable ABI in Rust made building a moddable architecture a daunting prospect.
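Without a stable Rust ABI, plugin systems typically fall back to the C ABI at the host/mod boundary. A minimal sketch, assuming a hypothetical `EntityStats` type and `mod_apply_buff` hook: `#[repr(C)]` fixes the struct layout and `extern "C"` fixes the calling convention, so only C-compatible types can cross by value. Ordinary Rust types such as `Vec`, `String`, or trait objects have no guaranteed layout across compiler versions and cannot safely cross this boundary by value, which is much of what makes a moddable Rust architecture daunting. A real mod would also be built as a shared library, export the hook under an unmangled symbol name, and be loaded by the host at runtime; here the function pointer is simply taken in-process.

```rust
use std::ffi::c_int;

/// Hypothetical mod-facing data type. `#[repr(C)]` pins the layout to C rules,
/// which stay stable across compilers; the default Rust layout does not.
#[repr(C)]
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct EntityStats {
    pub health: c_int,
    pub speed: f32,
}

/// Hypothetical hook a mod would export. `extern "C"` fixes the calling
/// convention, so a host built with a different rustc (or in C/C++) could call it.
pub extern "C" fn mod_apply_buff(stats: EntityStats) -> EntityStats {
    EntityStats {
        health: stats.health + 10,
        speed: stats.speed * 1.5,
    }
}

/// Function-pointer type the host would expect after looking up the symbol.
type BuffHook = extern "C" fn(EntityStats) -> EntityStats;

fn main() {
    // In-process stand-in for a hook loaded from a shared library.
    let hook: BuffHook = mod_apply_buff;
    let out = hook(EntityStats { health: 90, speed: 2.0 });
    println!("{:?}", out); // EntityStats { health: 100, speed: 3.0 }
}
```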

Conclusion: Admired but Gated

Rust’s challenges are the flip side of its strengths. Its powerful type system and fearless concurrency come at the cost of complexity. Its performance guarantees are paid for with slow compilation.

The gap between admiration and adoption is the distance between appreciating a language’s ideals and living with its daily realities. For companies, the barrier is economic inertia. For developers, it’s the friction of a slow compiler and a complex mental model. Until these costs come down, Rust will likely remain what it is today: the most admired language that most people don’t use.

References

- [1] Stack Overflow 2025 Developer Survey

- [2] Rust 2025… Jon Gjengset Explains (Interview)

- [3] Why is the Rust compiler so slow?

- [4] The Rust Compilation Model Calamity

- [5] Why Zig is moving away from LLVM

- [6] Rust Dependencies Scare Me

- [7] A sad day for Rust

- [8] The Rust I Wanted Had No Future

- [9] Zig FAQ on Async

- [10] Leaving Rust gamedev after 3 years