Why is the reliability of AI agents treated as an afterthought in the rush to build ‘agentic’ systems?

I don’t want an AI agent handling critical business processes—or even simple ones—without rigorous validation of its behavior across thousands of edge cases. A single unexpected response or action can cascade into costly errors, extended debugging sessions, and eroded user trust. Turning business-critical workflows into unpredictable AI black boxes isn’t innovation—it’s negligence.

Table of contents

The Determinism Deficit

The uncomfortable truth about today’s AI agents is that most are fundamentally non-deterministic. Feed them the same input twice, and you’ll likely get two different outputs. This unpredictability is built into their foundation—large language models possess inherent randomness (even with temperature set to zero), and most ‘agents’ are just LLMs with a thin orchestration layer wrapped around them.

Yet we keep seeing demos where these inherently unpredictable systems are handling tasks with real-world consequences:

- Managing customer service interactions

- Processing document-based workflows

- Making financial recommendations

- Generating and executing code

These are precisely the areas where consistency and predictability aren’t just nice-to-haves—they’re absolute requirements. Worse, agent failures are often heisenbugs—they vanish when you attach a debugger or try to reproduce them, only to surface again in production under conditions you can’t observe. The current approach amounts to releasing untested agents into production and hoping for the best. Would you deploy any other software system this way?

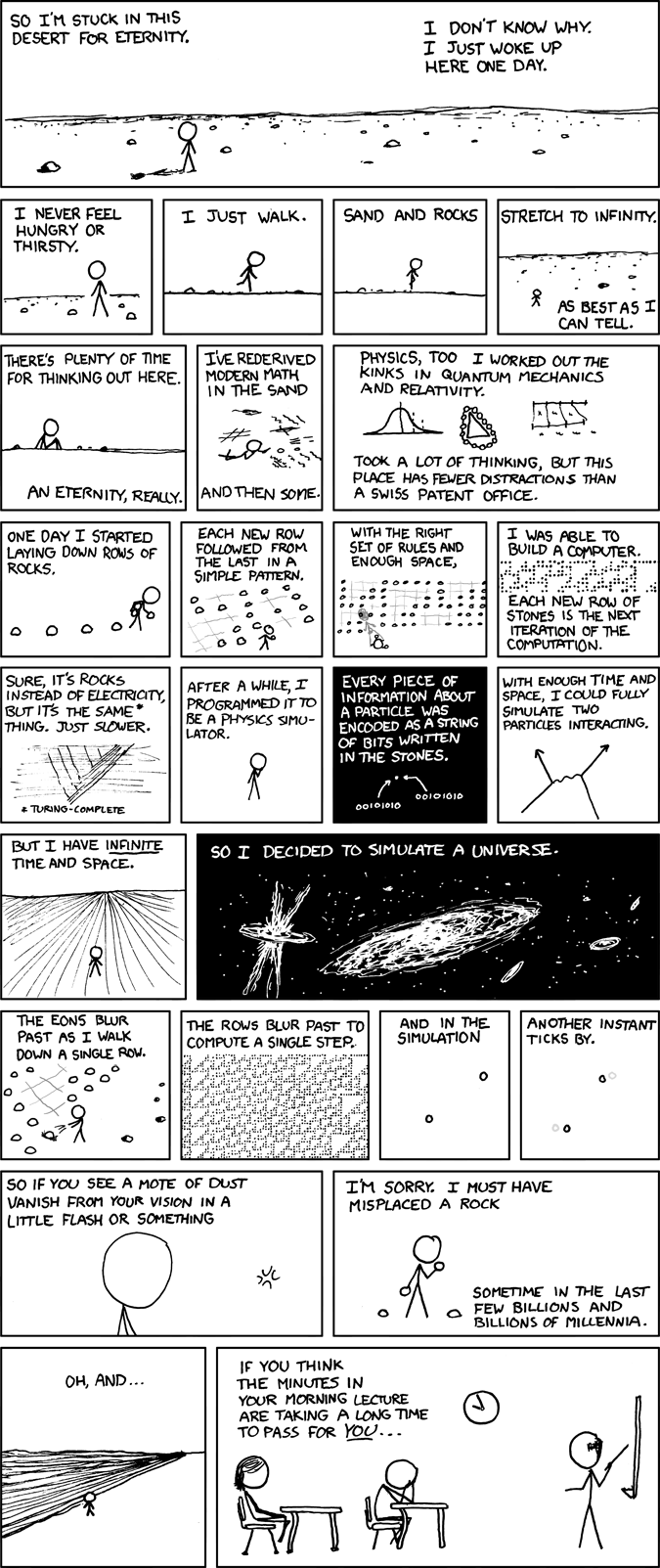

Like dropping a bunch of rocks down a hill and calling it a controlled demolition. (Source: XKCD)

Like dropping a bunch of rocks down a hill and calling it a controlled demolition. (Source: XKCD)

The Simulation Solution

This is where simulation testing enters the picture. While traditional software has long enjoyed robust testing frameworks, AI agents have been frustratingly difficult to test, primarily because:

- Combinatorial explosion - The number of possible paths through even a simple agent workflow is astronomically large

- Hidden state - Agents often maintain complex internal reasoning that’s opaque to testers

- External dependencies - Most agents interact with real-world APIs, databases, or other systems

Simulation testing addresses these challenges by creating controlled environments where agents can be systematically tested against synthetic scenarios. This approach provides:

- Catch Heisenbugs - The biggest benefit. Deterministic simulation testing (DST) freezes execution state at the moment of failure. No more debugging by guesswork—you can pause, rewind, and inspect the exact conditions that caused the bug.

- Reproducibility - The same test can be run repeatedly with identical inputs

- Coverage - Tests can target specific edge cases and failure modes

- Safety - Testing happens in isolated environments where failures are harmless

- Scale - Thousands of tests can run in parallel, exponentially faster than manual testing

The Myth-Reality Gap

Myth: ‘Our agent is too complex to test systematically’

Reality: Complex systems require more testing, not less. Financial trading algorithms, aerospace systems, and medical devices all undergo rigorous simulation testing despite their complexity. AI agents should be held to the same standard.

Myth: ‘We’ll just monitor in production and fix issues as they arise’

Reality: This reactive approach is inadequate for systems that make consequential decisions. By the time you’ve identified an issue in production, the damage is already done. Simulation testing is proactive—catching problems before they impact users.

Myth: ‘Our prompt engineering ensures reliable behavior’

Reality: Prompt engineering alone cannot guarantee consistent behavior across the wide range of inputs an agent will encounter in production. What works for your test cases may break spectacularly on edge cases you haven’t considered.

The DST Advantage: Catching Heisenbugs

The nightmare scenario: a bug surfaces in production, you try to reproduce it in development, and it never happens. That’s a heisenbug—behavior that changes when you attempt to observe it.

Traditional agent development creates heisenbugs constantly. A prompt works 99 times, fails on the 100th. An agent tool call succeeds in dev, times out in production. State corruption appears only under specific load conditions.

Deterministic simulation testing (DST) eliminates this problem. In a simulated environment, you control every variable:

- Time - Freeze at the exact microsecond of failure

- State - Inspect the complete agent memory and reasoning chain

- Environment - Reproduce exact API responses, rate limits, and failures

- Seeds - Replay the exact LLM output that caused the issue

What once required hours of blind debugging becomes a ten-minute investigation: find the seed, replay the simulation, see the failure.

Implementing Simulation Testing for Agents

So how do you actually implement simulation testing for AI agents? Here’s a practical approach:

1. Create Synthetic Environments

Build simplified versions of the environments your agent will operate in. For example, if your agent interacts with databases, create test databases with controlled data. If it uses external APIs, create mock versions that return predictable responses.

2. Generate Diverse Test Cases

Create a wide range of test scenarios that cover:

- Common happy paths

- Edge cases and rare events

- Adversarial inputs designed to confuse the agent

- Regression tests for previously discovered issues

Synthetic data generation is invaluable here—you can programmatically create thousands of test cases with controlled variations.

3. Simulate Human Interactions

One of the most powerful approaches is using AI to simulate humans interacting with your agent. You can create synthetic customer personas with different goals, communication styles, and knowledge levels. These synthetic customers can then engage in conversations with your agent, testing how it handles different interaction styles and requests.

4. Define Clear Success Criteria

For each test, define explicit criteria for what constitutes success:

- Did the agent accomplish the user’s goal?

- Did it follow all required constraints and policies?

- Did it complete the task within acceptable time limits?

- Did it avoid prohibited behaviors or responses?

Importantly, these criteria should be automatically verifiable wherever possible.

5. Implement Deterministic Components

Where absolute reliability is required, consider implementing deterministic components rather than relying entirely on LLM reasoning. This hybrid approach combines the flexibility of LLMs with the reliability of rule-based systems.

When testing LLM-driven components, use fixed seeds to reproduce specific outputs. All major providers—Anthropic, OpenAI, and Google—support seed parameters as of 2026. A seed won’t solve the fundamental non-determinism of language models—different model versions or providers will still produce different results. But within a single model version, seeds let you replay the exact same LLM response, turning an opaque failure into a reproducible test case.

The Tradeoffs

Let’s be honest about the costs and limitations of simulation testing:

- Development Overhead - Building robust simulation environments requires significant upfront investment.

- Synthetic vs. Real World Gap - No simulation perfectly captures all real-world complexity.

- Maintenance Burden - Test suites require ongoing maintenance as your agent evolves.

- Slower Iteration - Comprehensive testing may slow down the development cycle.

However, these costs are dwarfed by the benefits for any agent deployed in consequential settings. The question isn’t whether you can afford simulation testing, but whether you can afford to skip it.

The Future: Continuous Simulation

The most advanced teams are moving beyond pre-deployment testing to continuous simulation—running agents in parallel simulated environments even after deployment. This approach:

- Validates new agent versions against historical and synthetic scenarios before promotion to production

- Provides a safe environment to test hypothetical or rare scenarios that haven’t yet occurred in production

- Enables ongoing learning and improvement without real-world risk

This approach is becoming the gold standard for critical AI systems, and will likely become table stakes for all serious AI agent deployments.

Getting Started with DST

Building a full deterministic simulation framework from scratch is complex. FoundationDB and TigerBeetle spent years on it. Most teams don’t have that kind of time.

Antithesis offers a practical alternative. Instead of redesigning your entire system for determinism, you run your existing agent stack inside a deterministic hypervisor. The platform controls clocks, thread scheduling, and randomness while your code thinks it’s running normally.

This approach makes DST accessible without requiring every component to be built with simulation in mind from day one.

I genuinely appreciate their work pushing deterministic simulation testing forward. This isn’t a paid promotion—I just think what they’re building matters.

Want to explore DST for your agents? Visit antithesis.com to learn more.

Conclusion: Reliability Through Reproducibility

The AI industry’s bias toward capability over reliability is creating unsustainable risk. As we deploy increasingly autonomous agents in consequential settings, simulation testing isn’t optional—it’s essential.

The path to trustworthy AI agents doesn’t run through ever-larger models or more complex architectures. It runs through rigorous, systematic testing and validation.

The teams that win in the AI agent space won’t be those with the flashiest demos or the most capabilities. They’ll be the ones whose agents consistently, reliably deliver on their promises—and who can catch and fix heisenbugs before they ever reach production.

Stop hallucinating. Start simulating.

For a deeper dive into the Design-Simulate-Build methodology and real-world examples like TigerBeetle’s VOPR and FoundationDB’s deterministic simulation framework, see Simulation Testing: Build What You Can Simulate.